AI Ethics Guidelines That Won’t Keep You Up at Night


Picture this: you’re scrolling through tech news with a coffee in hand when you see yet another headline about AI gone wrong. Maybe it’s a hiring algorithm that discriminated against qualified candidates, or a chatbot that started spewing inappropriate content. You pause mid-sip and think, “There’s got to be a better way, right?”

Well, my friend, you’ve just stumbled upon the exact reason why AI ethics guidelines exist – and why they’re about to become your new best friend in the world of responsible technology.

I’ve been diving deep into the AI ethics landscape for years, watching it evolve from a “nice-to-have” afterthought to an absolute business necessity. Trust me, whether you’re a curious beginner trying to understand what all the fuss is about, or a seasoned developer looking to build more responsible systems, this guide has got you covered.

What Exactly Are AI Ethics Guidelines? (And Why Should You Care?)

Let’s start with the basics. AI ethics guidelines are essentially your moral compass for artificial intelligence development and deployment. Think of them as the rulebook that helps you build AI systems that don’t just work well, but work right.

These guidelines aren’t just philosophical fluff – they’re practical frameworks that address real-world challenges like:

- Bias prevention (because nobody wants their AI to be accidentally prejudiced)

- Privacy protection (your data should stay your data)

- Transparency requirements (no more mysterious black boxes)

- Accountability measures (someone needs to take responsibility)

Here’s the thing that surprised me when I first started exploring this field: AI ethics guidelines aren’t trying to slow down innovation. They’re actually designed to accelerate it by building trust and preventing costly mistakes down the road.

The 10 Core Principles That Actually Matter in 2025

✅ 1. Fairness First – Your AI Shouldn’t Play Favorites

Fairness in AI isn’t just about being “nice” – it’s about ensuring your systems treat everyone equitably. This means actively identifying and mitigating biases that might creep into your algorithms.

Real-world impact: Companies using biased hiring algorithms have faced lawsuits costing millions. Fair AI isn’t just ethical; it’s economically smart.

✅ 2. Transparency That Makes Sense – No More Black Boxes

Gone are the days when “the algorithm did it” was an acceptable explanation. Modern AI transparency means being able to explain how and why your AI makes specific decisions.

Pro tip: Tools like IBM’s AI Explainability 360 and Microsoft’s Responsible AI Toolbox can help you build explainable systems from the ground up.
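To make “explain how and why” a little more concrete, here is a minimal Python sketch for the simplest possible case: a linear scoring model, where each feature’s contribution is just its weight times its value. The feature names, weights, and the `explain_score` helper are all hypothetical and invented for illustration; more complex models generally need dedicated explainability tooling.

```python
def explain_score(weights: dict, features: dict, bias: float = 0.0):
    """Return (score, contributions) for a linear model.

    contributions is a list of (feature_name, contribution) pairs, sorted so
    the features that moved the score the most come first.
    """
    contributions = [
        (name, weights[name] * features.get(name, 0.0)) for name in weights
    ]
    score = bias + sum(value for _, value in contributions)
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return score, contributions


# Hypothetical loan-scoring weights and one applicant's (already scaled) inputs.
weights = {"income": 0.5, "debt_ratio": -2.0, "years_employed": 0.25}
applicant = {"income": 4.0, "debt_ratio": 0.5, "years_employed": 2.0}

score, reasons = explain_score(weights, applicant, bias=1.0)
for name, contribution in reasons:
    print(f"{name}: {contribution:+.2f}")
print(f"score: {score:.2f}")
```

A ranked list like this is the kind of answer you want to be able to give when someone asks why the system decided what it did.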

✅ 3. Privacy by Design – Data Protection That Actually Works

AI privacy isn’t an add-on feature – it should be baked into your system’s architecture. This means implementing techniques like differential privacy and federated learning to protect user data.
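If differential privacy sounds abstract, the core idea fits in a few lines. The sketch below applies the Laplace mechanism to a simple counting query, using only the Python standard library. The function names, the sample data, and the choice of `epsilon=1.0` are assumptions made for illustration; a production system would more likely rely on a vetted privacy library than on hand-rolled noise.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the items that satisfy predicate.

    A counting query has sensitivity 1 (adding or removing one person changes
    the count by at most 1), so the Laplace noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(42)  # seeded only so the demo is repeatable
ages = [23, 35, 41, 52, 29, 67, 38, 45]  # made-up records
true_count = sum(1 for age in ages if age >= 40)
noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0, rng=rng)
print(f"true count: {true_count}, noisy count: {noisy:.2f}")
```

The published number is close enough to be useful in aggregate, but no single person’s presence in the data can be confidently inferred from it.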

✅ 4. Human Oversight – Keeping Humans in the Loop

Even the smartest AI needs human supervision. This principle ensures that critical decisions always have meaningful human involvement.
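One common way to keep humans in the loop is a simple routing rule: anything high-stakes or low-confidence goes to a person. Here is a rough Python sketch of that idea. The `Decision` class, the `route` function, and the 0.9 threshold are hypothetical choices for illustration, not a standard; the right threshold depends on your domain and risk tolerance.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    label: str          # what the model wants to do
    confidence: float   # model's confidence in [0, 1]
    high_stakes: bool   # flagged by policy, e.g. affects health or livelihood


def route(decision: Decision, min_confidence: float = 0.9) -> str:
    """Return 'human_review' for risky decisions, otherwise 'auto'."""
    if decision.high_stakes or decision.confidence < min_confidence:
        return "human_review"
    return "auto"


print(route(Decision("approve_refund", 0.97, high_stakes=False)))
print(route(Decision("approve_refund", 0.62, high_stakes=False)))
print(route(Decision("deny_claim", 0.99, high_stakes=True)))
```

Note that the high-stakes flag overrides confidence entirely: a very sure model still does not get to make a critical call alone.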

✅ 5. Robustness and Safety – Building AI That Won’t Break

Your AI systems need to perform reliably, even when faced with unexpected inputs or adversarial attacks. AI safety measures include robust testing, validation, and fail-safe mechanisms.

Figure: An AI safety testing facility with a central, transparent containment unit simulating an urban scenario (transportation and infrastructure), flanked by “Medical Diagnostic” and “Disaster Response” workstations, and monitored through display panels showing real-time data, performance metrics, and risk assessments.

✅ 6. Accountability Structures – Who’s Responsible When Things Go Wrong?

Clear AI accountability means establishing who’s responsible for AI decisions and having processes in place to address problems when they arise.

✅ 7. Environmental Responsibility – Green AI for a Sustainable Future

Large AI models consume significant energy. Responsible AI includes considering the environmental impact of your systems and optimizing for efficiency.
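A back-of-the-envelope estimate is often enough to start the conversation. The Python sketch below multiplies hardware power draw by run time and data-center overhead (PUE, power usage effectiveness) to get energy, then by grid carbon intensity to get emissions. Every number in the example is a made-up placeholder, and `training_footprint` is a hypothetical helper; plug in measured values from your own infrastructure.

```python
def training_footprint(gpu_count: int, avg_power_kw: float, hours: float,
                       pue: float, grid_kg_co2e_per_kwh: float):
    """Return (energy_kwh, emissions_kg_co2e) for one training run.

    energy    = GPUs x average power per GPU (kW) x hours x PUE
    emissions = energy x grid carbon intensity (kg CO2e per kWh)
    """
    energy_kwh = gpu_count * avg_power_kw * hours * pue
    return energy_kwh, energy_kwh * grid_kg_co2e_per_kwh


# Placeholder inputs: 8 GPUs at 0.3 kW each for 100 hours, PUE 1.2,
# on a grid emitting 0.4 kg CO2e per kWh.
energy, emissions = training_footprint(8, 0.3, 100, 1.2, 0.4)
print(f"energy: {energy:.1f} kWh, emissions: {emissions:.1f} kg CO2e")
```

With these placeholder inputs the run works out to roughly 288 kWh and about 115 kg CO2e, which gives you a baseline to compare against when you try a smaller model or a shorter training schedule.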

✅ 8. Social Benefit – AI That Actually Helps People

The best AI systems solve real problems and create genuine value for society, not just shareholders.

✅ 9. Continuous Monitoring – Because “Set It and Forget It” Doesn’t Work

AI governance requires ongoing monitoring and adjustment. Your ethical AI framework needs regular check-ups.
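One practical form of a “regular check-up” is comparing today’s input data against the data your model was validated on. The sketch below computes the Population Stability Index (PSI), a widely used drift score, with the standard library only. The function name, the ten-bin default, and the sample data are assumptions for illustration. A commonly cited rule of thumb treats a PSI above roughly 0.25 as a significant shift, but treat that cutoff as a convention rather than a guarantee.

```python
import math


def population_stability_index(expected, actual, bins: int = 10,
                               eps: float = 1e-6) -> float:
    """PSI between a baseline sample (expected) and a current sample (actual).

    Bins are equal-width over the baseline's range; current values outside
    that range are clamped into the first or last bin. Empty bins are floored
    at eps so the logarithm stays defined.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(data):
        counts = [0] * bins
        for x in data:
            idx = int((x - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(data), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]        # scores seen at validation time
current = [0.5 + i / 200 for i in range(100)]   # scores now skew high

psi = population_stability_index(baseline, current)
print(f"PSI: {psi:.2f}", "-> investigate" if psi > 0.25 else "-> stable")
```

Running a check like this on a schedule, and alerting when the score crosses your chosen threshold, turns monitoring from a good intention into a routine.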

✅ 10. Cultural Sensitivity – Global AI for a Global World

AI systems deployed internationally need to respect different cultural values and legal requirements.

Industry-Specific AI Ethics Guidelines That You Need to Know

Healthcare AI Ethics

When you’re dealing with people’s lives, the stakes couldn’t be higher. AI ethics guidelines for healthcare focus heavily on:

- Patient consent and data protection

- Clinical validation and safety

- Bias prevention in diagnostic systems

- Maintaining human clinical judgment

Financial Services AI Ethics

Banks and fintech companies face unique challenges around fairness and discrimination. Key considerations include:

- Fair lending practices

- Transparent credit scoring

- Anti-discrimination measures

- Regulatory compliance

Education Technology AI Ethics

AI governance in education emphasizes:

- Student privacy protection

- Equitable access to AI-enhanced learning

- Preventing algorithmic bias in assessments

- Supporting rather than replacing human educators

| Industry | Primary Focus | Key Regulations | Unique Challenges |
|---|---|---|---|
| Healthcare | Patient safety, privacy | HIPAA, FDA guidelines | Life-or-death decisions |
| Finance | Fair lending, transparency | Fair Credit Reporting Act | Economic impact |
| Education | Student privacy, equity | FERPA, accessibility laws | Developmental considerations |
| Criminal Justice | Due process, bias prevention | Constitutional protections | Liberty and justice impacts |

The Global Landscape: How AI Ethics Varies by Region

European Approach – The AI Act and Beyond

Europe leads with comprehensive AI regulation through the EU AI Act, which sorts AI systems into risk tiers (unacceptable, high, limited, and minimal risk) and imposes requirements that scale with the tier.

United States Framework – Sector-Specific Guidelines

The US takes a more distributed approach, with different agencies developing AI ethics principles for their specific domains.

Asian Perspectives – Innovation with Responsibility

Countries like Singapore and Japan are developing frameworks that balance innovation with ethical AI requirements.

Top Tools and Platforms for Implementing AI Ethics (The Practical Stuff)

Let me share some tools that actually work in the real world:

Enterprise-Grade Solutions

IBM watsonx.governance offers comprehensive AI lifecycle management with built-in ethics checks. It’s like having an ethics officer built into your development pipeline.

Credo AI provides a dedicated platform for responsible AI governance, helping you implement and monitor ethical guidelines across your entire AI portfolio.

Open-Source Tools That Don’t Break the Bank

AI Fairness 360 from IBM gives you a powerful toolkit for detecting and mitigating bias in your models. Best part? It’s completely free.

TensorFlow Federated enables privacy-preserving machine learning, perfect for scenarios where data can’t leave its original location.

Cloud-Based Solutions

Amazon SageMaker includes responsible AI features, such as SageMaker Clarify for bias detection and explainability, that help you implement AI ethics guidelines without rebuilding your entire infrastructure.

Microsoft’s Responsible AI Toolbox integrates with Azure Machine Learning, offering bias detection, interpretability, and governance features.

Implementation Roadmap: Your Step-by-Step Guide

✅ Phase 1: Assessment and Planning (Weeks 1-2)

Start by auditing your current AI systems and identifying potential ethical risks. Ask yourself:

- Where might bias creep in?

- What data privacy concerns exist?

- Who would be affected by AI failures?
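If you want something more structured than a list of questions, a lightweight risk register is a reasonable starting point. The Python sketch below scores each system as likelihood times impact and sorts the inventory so the riskiest systems surface first. The `AISystem` class, the 1 to 5 scales, and the example systems are all hypothetical; adapt the scoring to whatever risk methodology your organization already uses.

```python
from dataclasses import dataclass


@dataclass
class AISystem:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain) chance of an ethical failure
    impact: int      # 1 (minor) to 5 (severe) harm to the people affected

    @property
    def risk_score(self) -> int:
        return self.likelihood * self.impact


def prioritize(systems):
    """Return systems ordered from highest to lowest risk score."""
    return sorted(systems, key=lambda s: s.risk_score, reverse=True)


# Made-up inventory for illustration.
inventory = [
    AISystem("content recommender", likelihood=3, impact=2),
    AISystem("resume screener", likelihood=4, impact=5),
    AISystem("support chatbot", likelihood=3, impact=3),
]

for system in prioritize(inventory):
    print(f"{system.risk_score:>2}  {system.name}")
```

The exact numbers matter less than the ordering: the audit should tell you where to spend your first weeks.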

✅ Phase 2: Framework Development (Weeks 3-4)

Develop your organization’s ethical AI framework based on:

- Industry-specific requirements

- Regulatory compliance needs

- Organizational values and risk tolerance

✅ Phase 3: Tool Implementation (Weeks 5-8)

Choose and implement the right tools for your context. Start with bias detection and transparency tools, then expand to comprehensive governance platforms.

✅ Phase 4: Training and Culture Change (Ongoing)

AI ethics policy development isn’t just about technology – it’s about people. Invest in training your team and building an ethical culture.

Common Pitfalls (And How to Avoid Them)

The “Ethics Theater” Trap

Don’t just check boxes. Real AI accountability means making meaningful changes to your development processes, not just creating impressive-looking policies.

The “One-Size-Fits-All” Mistake

Different AI applications require different ethical considerations. Your content recommendation algorithm needs different safeguards than your medical diagnostic system.

The “Set and Forget” Error

AI governance is an ongoing process, not a one-time project. Regular monitoring and updates are essential.

Measuring Success: KPIs for Ethical AI

How do you know if your AI ethics guidelines are actually working? Here are some metrics that matter:

Quantitative Measures

- Bias metrics across different demographic groups

- Transparency scores for model explainability

- Privacy impact assessments

- Incident response times for ethical issues

Qualitative Indicators

- Stakeholder trust levels

- Regulatory compliance status

- Employee confidence in AI systems

- Customer satisfaction with AI interactions

| Metric Category | Sample KPIs | Target Range | Current Status |
|---|---|---|---|
| Fairness | Demographic parity | ±5% across groups | ✅ Within range |
| Transparency | Explainability coverage | >90% of decisions | ⚠️ Needs improvement |
| Privacy | Data minimization ratio | <30% unnecessary data | ✅ Compliant |
| Safety | Incident response time | <4 hours | ✅ Average 2.5 hours |
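To show how the fairness row in a table like this could be measured, here is a minimal Python sketch of a demographic parity check. It computes the selection rate for each group and compares the largest gap against a tolerance. Reading “±5% across groups” as a maximum gap of 5 percentage points is my assumption, and the function names and sample data are hypothetical; toolkits such as AI Fairness 360 offer more complete metrics.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, selected) pairs. Returns {group: rate}."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        positives[group] += int(bool(selected))
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(records) -> float:
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Made-up outcomes: group A selected 40 of 100 times, group B 37 of 100.
records = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 37 + [("B", False)] * 63
)

gap = demographic_parity_gap(records)
tolerance = 0.05  # assumed reading of the ±5% target
status = "within range" if gap <= tolerance else "needs attention"
print(f"parity gap: {gap:.3f} ({status})")
```

Tracking this gap over time, rather than checking it once, is what turns it into a KPI.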

The Future of AI Ethics: What’s Coming in 2025 and Beyond

Regulatory Evolution

Expect more comprehensive AI regulation at both national and international levels. The EU AI Act is just the beginning.

Technical Advances

New tools for responsible AI are emerging constantly, making ethical implementation easier and more cost-effective.

Industry Standards

Global AI ethics standards are converging, creating more consistency across different markets and applications.

Building Your AI Ethics Dream Team

Essential Roles

You’ll need people who understand both technology and ethics:

- AI Ethics Officer – Your dedicated ethics champion

- Data Scientists with ethics training – Technical experts who can implement safeguards

- Legal and Compliance specialists – People who understand the regulatory landscape

- Domain experts – Professionals who understand your specific industry context

External Partnerships

Consider partnering with organizations like Partnership on AI or the Responsible AI Institute (formerly AI Global) for guidance and certification.

Cost-Benefit Analysis: Is Ethical AI Worth the Investment?

Short-term Costs

- Tool implementation and training

- Process modifications

- Additional testing and validation

Long-term Benefits

- Risk mitigation (avoiding costly lawsuits and regulatory fines)

- Brand protection (maintaining customer trust)

- Competitive advantage (attracting ethics-conscious customers and talent)

- Innovation acceleration (building on solid, trustworthy foundations)

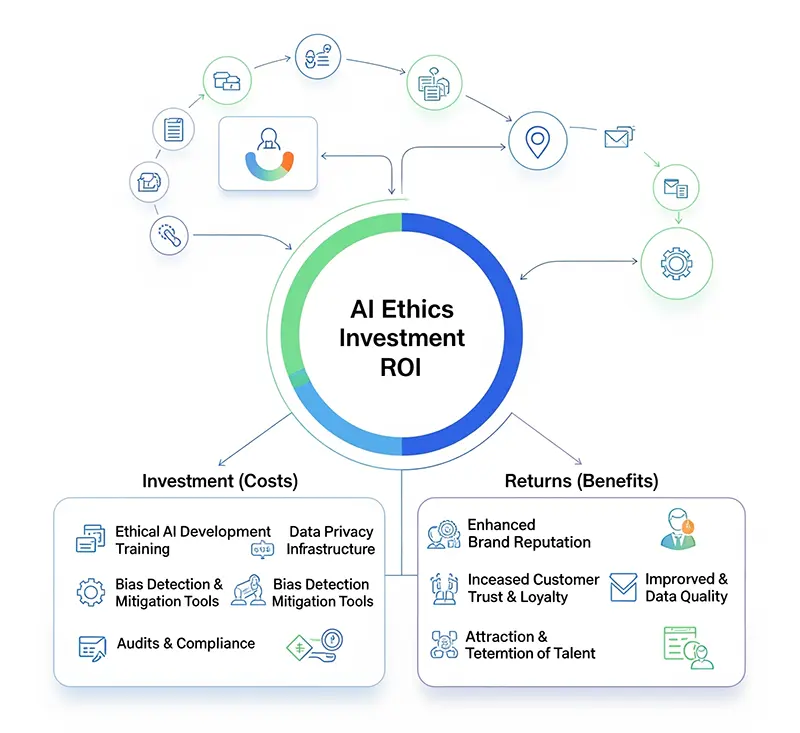

A modern and visual diagram that breaks down the calculation of Return on Investment (ROI) for AI ethics investments.

At the center is a large circular graphic titled “AI Ethics Investment ROI,” which serves as the core of the model, illustrating how the value of these returns is measured against the initial investment to determine profitability.

Two main sections branch out from this central point:

- Investment (Costs): A section on the left details the financial outlays, represented by icons. These include investments in areas like Ethical AI Development Training, Data Privacy Infrastructure, Bias Detection & Mitigation Tools, and Audits & Compliance.

- Returns (Benefits): A section on the right outlines the tangible and intangible benefits that result from these investments. The benefits shown are Enhanced Brand Reputation, Increased Customer Trust & Loyalty, Improved & Data Quality, and Attraction & Retention of Talent.

Real-World Success Stories

Company A: From Bias to Breakthrough

A major tech company discovered bias in their hiring algorithm. Instead of ignoring it, they implemented comprehensive AI fairness measures, resulting in 40% more diverse hiring and improved performance metrics.

Company B: Privacy-First Profits

A healthcare AI startup built privacy protection into their core architecture from day one. This decision helped them win major contracts with privacy-conscious healthcare providers.

Your Action Plan: Getting Started Today

Immediate Steps (This Week)

- Audit your current AI systems for potential ethical issues

- Identify your highest-risk applications that need immediate attention

- Research relevant regulations for your industry and geographic markets

Short-term Goals (Next Month)

- Choose your initial toolkit from the recommended platforms

- Establish basic governance processes for AI development

- Start team training on AI ethics principles

Long-term Vision (Next Quarter)

- Implement comprehensive monitoring systems

- Develop industry partnerships for ongoing guidance

- Create an ethics-first culture that makes responsible AI automatic

Conclusion: Your Ethical AI Journey Starts Now

Here’s the truth I’ve learned after years in this space: AI ethics guidelines aren’t obstacles to innovation – they’re the foundation that makes sustainable, trustworthy innovation possible.

Whether you’re building your first machine learning model or managing a portfolio of AI products, the principles and tools we’ve covered today will help you create technology that works not just for your business, but for everyone who interacts with it.

The companies that embrace responsible AI today will be the ones still thriving when the regulatory landscape fully matures. The question isn’t whether you need AI ethics guidelines – it’s whether you’ll be proactive or reactive in implementing them.

Ready to start your ethical AI journey? Begin with a simple audit of your current systems, choose one tool from our recommended list, and start building the responsible AI culture your organization needs to succeed.

Because at the end of the day, the best AI isn’t just intelligent – it’s intelligently ethical.

What’s your biggest challenge with AI ethics implementation? Drop a comment below and let’s solve it together. And if you found this guide helpful, share it with your team – because ethical AI works best when we’re all working toward the same goal.
